In a world where the digital landscape evolves quicker than a chameleon on a disco dance floor, tech giants like Google are making bold moves.

Recently, Google announced that Chrome will bid farewell to third-party cookies. It's an interesting development, but let's park the technical banter for a moment and ponder a simpler question: does the average surfer of the world wide web truly understand what cookies are, let alone their privacy implications and the safeguards available to them?

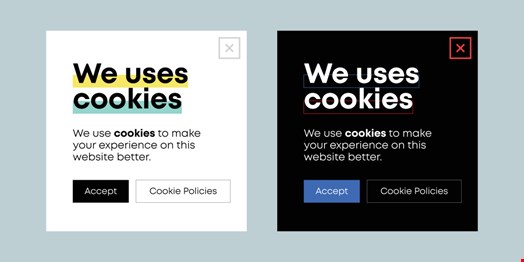

The crux of the matter is that most people want their technology served up hassle-free. They will click through pop-ups and tick boxes faster than you can say 'cookie consent,' all in a bid to dive into the content they crave. This sprint towards convenience, however, leaves a trail of data breadcrumbs that can easily end up in the wrong hands.

Why does this happen so often? It's not for lack of privacy features in our apps and browsers. Rather, these features are buried like treasure under layers of menus and written in a dialect that might as well be hieroglyphs to the layperson.

Expecting the average person to decode these digital maps without a primer in cybersecurity is akin to asking someone to pilot a plane without any flight training. Without a grasp of the basics, how can we expect users to make informed decisions amid a sea of online risks?

Herein lies a hefty slice of responsibility for online providers, from social media platforms and other apps to browser makers like Google, who should also step up as providers of knowledge. Imagine if, rather than just handing out maps, they highlighted both the pitfalls and the treasures. By equipping users with insight and clarity, they would empower them to navigate the cyber seas with a sharper sense of the risks involved.

The Cybersecurity Industry Must Take More Responsibility

Dig deeper and it becomes apparent that the cybersecurity industry itself is somewhat complicit in muddying the waters. We have a penchant for swirling around in a whirlpool of esoteric jargon and acronyms that would bewilder anyone not inducted into our secret society. We are so focused on fortifying the walls and sharpening the spears that user understanding often gets left behind.

From a user's perspective, one of the main criticisms of security controls is their apparent lack of empathy for users' needs and requirements. Part of this comes down to how messaging is delivered: it often focuses on the negative instead of the positive.

For example, during a password audit, it is far more encouraging to tell a user that 80% of their passwords are strong and meet requirements. Yet the messaging from security teams often focuses on the 20% of passwords that fail to meet the standards. This can be disheartening to hear and does little to foster positive behavior in the future.

Another oft-overlooked problem is that security policies sometimes fail to account for the real-life challenges people face. Imagine you hopped on a boat and the captain announced that being seasick was forbidden and against the terms and conditions of getting on board.

This is how some security policies feel to some users. Rather than acknowledging that seasickness is a real thing that can affect anyone, the policy simply declares that being sick is not allowed. A more empathetic approach would be to provide sick bags or anti-sickness medication, showing care for the passengers and creating an inclusive environment.

The Curb-Cut Effect is a good example of how initiatives designed to benefit vulnerable groups often end up benefiting all of society. Curb cuts are incredibly useful for disabled people, yet wheelchair users aren't the only ones they help. Curb cuts also make life easier for people pushing children in strollers, using trolleys for deliveries, pulling a suitcase, wheeling a bike or riding a skateboard, and they help save lives by guiding people to cross at safe locations.

Another example is closed captioning on TV, which helps anyone watching in a noisy bar or a waiting room, or following an airline safety video.

Similarly, security policies and controls should consider not just technical users, but non-technical ones too. By designing for those who are less familiar with technical terms, we can create an environment that is more secure for everyone.

Ideally, security should be the anti-lock braking system (ABS) of the digital realm. Drivers don’t need a manual to understand ABS; it simply works in the background, ensuring safety without asking them to alter their driving habits. Just as we don't expect every driver to become a mechanic, it’s unrealistic – and frankly, unfair – to expect every user to morph into a cyberspace sentinel.

Instead, what we can (and should) do is design systems that are intuitive to use and easy to understand. Supplement this with security awareness training on best practices, delivered not through scare tactics but by encouraging positive behavioral change. In doing so, we can cultivate a robust security culture that embraces all users, irrespective of their technical skills.

Our focus should be not just on innovating security mechanisms but on making them accessible and comprehensible. Only by demystifying cybersecurity and weaving it seamlessly into the user experience can we hope to strengthen our digital habits and create a culture that is savvier, safer, and more inclusive.