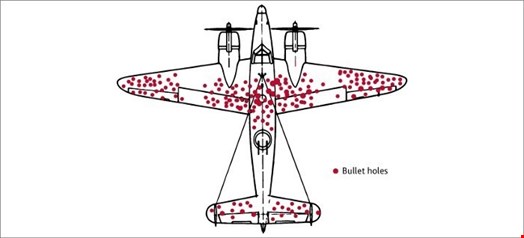

The concept of survivorship bias is best illustrated by a short history lesson from World War II. One of the biggest problems the Royal Air Force and the United States Army Air Forces faced was how to prevent their aircraft from being shot down. Adding armour made the aircraft heavier and reduced their range, so the solution was to armour only the most essential areas. Mathematicians and scientists found that the majority of bullet holes in returning aircraft were in the fuselage and very few were in the engines, which is why they initially chose to armour the fuselage.

Mathematician Abraham Wald, however, asked a simple question: where had the bullets hit the aircraft that did not make it back home? The other scientists and mathematicians had been studying only the aircraft that survived, and were therefore working from results skewed by survivorship bias. Aircraft hit in the engines rarely returned, which is why so few engine hits appeared in the data. Once attention shifted to the aircraft that never returned, the armour was placed around the engines, saving many aircraft and lives.
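The skew Wald spotted can be reproduced with a small simulation. The sketch below is illustrative only: the section names, the loss probabilities and the assumption that hits land evenly across sections are made up for the example, not historical data. It counts hits across every aircraft, then counts again using only the aircraft that returned.

```python
import random

SECTIONS = ["fuselage", "wings", "engines"]

# Hypothetical chance that a single hit in a section brings the aircraft down.
LOSS_PROBABILITY = {"fuselage": 0.05, "wings": 0.10, "engines": 0.60}


def simulate(num_aircraft=10_000, hits_per_aircraft=3, seed=42):
    """Return (hits on all aircraft, hits on returning aircraft) per section."""
    rng = random.Random(seed)
    all_hits = dict.fromkeys(SECTIONS, 0)
    observed_hits = dict.fromkeys(SECTIONS, 0)
    for _ in range(num_aircraft):
        # Assumption: every section is equally likely to be hit.
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_aircraft)]
        # The aircraft returns only if it survives every hit it took.
        survived = all(rng.random() > LOSS_PROBABILITY[s] for s in hits)
        for section in hits:
            all_hits[section] += 1
            if survived:
                observed_hits[section] += 1
    return all_hits, observed_hits


def share(counts, section):
    """Fraction of all counted hits that landed on the given section."""
    return counts[section] / sum(counts.values())


all_hits, observed_hits = simulate()
print("engine share, all aircraft:      ", round(share(all_hits, "engines"), 3))
print("engine share, returning aircraft:", round(share(observed_hits, "engines"), 3))
```

Under these assumptions the engines take roughly a third of all hits, yet they account for a much smaller share of the holes counted on returning aircraft, because the aircraft hit there are the ones missing from the sample.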

What Does This Have to Do with Cybersecurity?

Survivorship bias is a constant in cybersecurity. Every piece of technology we deploy and every indicator of compromise that makes up our detection capabilities is based on known threats, or on where we expect to see new attack surfaces.

While this approach may work in the short-term, purely reacting to expected risks prevents organizations from becoming truly secure by design.

How Do You Avoid Falling into This Trap?

Avoiding this common pitfall depends on making decisions about cybersecurity solutions and teams on the assumption that you do not have all the information. The minute you start to rely only on known threats, you will be playing catch-up. A good example is the set of compromises that arose from the SolarWinds breach. Few organizations were looking at their own trusted monitoring and security tools as a pivot point for a threat actor to gain access and begin lateral movement.

One of the best ways to avoid survivorship bias is to bring your penetration testing and security operations center (SOC) teams together regularly to identify new threats and attack scenarios. This draws on the Medici Effect, the idea that bringing together people from different disciplines helps them look at a problem from a fresh perspective. The tactic tends to surface a wider range of ideas on where to search for other weak points in an organization’s defenses.

Being cybersecurity conscious at all levels of the business and building in security from the start is a reliable route to security by design. A classic example is securing operational technology (OT). If an organization’s security team is not talking to the engineers, it will have no insight into the risks to production systems and, in turn, to people’s safety.

The OT space is becoming more and more important for cybersecurity. Yet every factory, plant and network architecture varies and presents multiple weaknesses. As a result, the amount of unknown information is extensive. To overcome this, it’s important to work closely with engineering staff to understand the problems and find ways to improve your baseline security.

This approach can also be seen in security information and event management (SIEM) use cases, where starting with the baseline is key. Anything outside the norm should be investigated, as it could be a system fault or a sign of compromise. It’s good practice for security teams to work in this way, and to develop threat hunting strategies. Firstly, use your baseline and vendor use cases to pinpoint known issues within your organization. Then work with both your business and operations teams to understand what the biggest risks are. From here, you can focus your efforts on protecting the assets most at risk, whilst looking for anomalies outside of the business norm.
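As a rough illustration of baselining, the sketch below learns a normal range of hourly event counts per log source and flags anything well outside it. The source names, the sample counts and the three-standard-deviation threshold are all assumptions made for the example; a real SIEM would use its own data model and tuning. Sources with no baseline at all are flagged rather than ignored, since silently skipping them would be its own form of survivorship bias.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Map each source to (mean, standard deviation) of its hourly counts.

    Each source needs at least two historical samples for stdev().
    """
    return {src: (mean(counts), stdev(counts)) for src, counts in history.items()}


def find_anomalies(baseline, current, threshold=3.0):
    """Return (source, count, reason) for every count outside the baseline."""
    anomalies = []
    for src, count in current.items():
        if src not in baseline:
            # Unknown sources are surfaced for review, not dropped.
            anomalies.append((src, count, "no baseline"))
            continue
        mu, sigma = baseline[src]
        if sigma == 0:
            # A perfectly flat history: any change at all is unusual.
            if count != mu:
                anomalies.append((src, count, "deviates from constant baseline"))
            continue
        z_score = (count - mu) / sigma
        if abs(z_score) > threshold:
            anomalies.append((src, count, f"z-score {z_score:.1f}"))
    return anomalies


# Hypothetical hourly event counts per log source.
history = {
    "vpn-gateway": [100, 110, 95, 105, 98],
    "plc-historian": [20, 22, 19, 21, 20],
}
current = {"vpn-gateway": 104, "plc-historian": 60, "build-server": 5}

for source, count, reason in find_anomalies(build_baseline(history), current):
    print(f"{source}: {count} events ({reason})")
```

A z-score on event volume is only one possible notion of "normal". The point of the sketch is the workflow: establish the baseline first, then investigate whatever falls outside it.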

Conclusion: Proactive Cybersecurity

Another way to boost defenses is to rely on attack scenarios and threat patterns. These are considered to be one layer above SIEM use cases, as they give organizations a better overview of potential threats. Both provide a higher-level picture of attack methods, such as ransomware or phishing, and allow businesses to break threats down into component parts.
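One way to picture this layering is to list the stages of each attack scenario and check which stages no existing use case would detect. The sketch below is a minimal illustration: the stage names and use case names are hypothetical and do not come from any particular framework or product.

```python
# Hypothetical attack scenarios, each broken down into component stages.
SCENARIOS = {
    "ransomware": [
        "initial access",
        "privilege escalation",
        "lateral movement",
        "data encryption",
    ],
    "phishing": ["email delivery", "credential capture", "account takeover"],
}

# Hypothetical SIEM use cases and the stages each one can detect.
USE_CASES = {
    "suspicious-attachment-opened": {"initial access", "email delivery"},
    "mass-file-rename": {"data encryption"},
}


def coverage_gaps(scenarios, use_cases):
    """Return, per scenario, the stages that no use case covers."""
    covered = set().union(*use_cases.values())
    return {
        name: [stage for stage in stages if stage not in covered]
        for name, stages in scenarios.items()
    }


for scenario, gaps in coverage_gaps(SCENARIOS, USE_CASES).items():
    print(f"{scenario}: uncovered stages -> {gaps}")
```

With the sample data above, the ransomware scenario has no detection for privilege escalation or lateral movement, and the phishing scenario has none for credential capture or account takeover. Gaps like these are what a review driven only by existing use cases would miss.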

Beyond this, avoiding complacency is key to getting cybersecurity right. This involves assessing whether you are getting the most from your tools and your team, which requires thinking ‘outside the box’. Importantly, security is as much a business and people issue as it is a technical one.

Even if you are currently satisfied with your security program and detection capabilities, keep in mind that they could be compromised in the future. In other words, do not assume all is well and wait for an incident to expose gaps in capabilities. Test your solutions ahead of time to ensure they perform to an acceptable standard. Keep reviewing what your security tools and teams are doing and what they are capable of, and keep asking, ‘What am I missing?’

By being proactive and taking steps to avoid survivorship bias, organizations will be in a good position to embrace secure-by-design principles and to stay better protected against a constantly shifting threat landscape.