The Internet holds a vast amount of information, with rich resources in almost every field. As the size and complexity of data sets on the web have grown, collecting and storing this information in useful formats has become increasingly important.

Bots excel at carrying out specific, routine tasks. However, such seemingly harmless automation can be used for both good and evil. For example, bots are often used maliciously to scrape content from websites or to abuse login forms with automated tools: the bot repeatedly submits an email address and password until a combination finally “clicks.” This gives malicious users access to all the account information associated with that email address.

Even at a time when web scraping is becoming legal, it remains a matter of great concern for many businesses that value the data at their disposal. Factor in the real financial losses that scraping bots can cause business owners and operators, and the picture is seriously troubling.

For instance, in April 2021, researchers reported that the personal details of more than 500 million Facebook users were circulating on a cybercrime forum. Analysis of this incident showed that the attackers used web scraping to get hold of the data.

In this article, we look at how web scraping attacks work, the impact of scraping bots and how anti-bot protection helps protect a website from a host of malicious bots.

So, What Is Web Scraping, Anyway?

Web scraping is the automated process of loading, crawling and extracting valuable data from a website using bots.

Web scraping that is not performed ethically has many consequences. The biggest concern is that it can violate someone else’s privacy (e.g., by scraping financial information).

Scraping bots can also be put to illegal use, such as stealing content and data, creating online accounts, online spamming, account takeover, stealing competitive pricing models, manipulating search results, etc.

How Does a Scraping Bot Work?

The first step in data scraping is to crawl the target page and load all of its HTML code, including JavaScript and stylesheets. In the case of an application, a bot is more interested in retrieving data through the application programming interface (API).

Once the page is crawled, the bot parses it to extract information (product information, data sets, pricing information, etc.). In the final step, the web scraper reformats the extracted data and outputs it in one or more formats, such as CSV files or spreadsheets.
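The load, parse and output steps can be sketched in a few lines of Python. This is only a minimal illustration using the standard library. The sample HTML, the `product`, `name` and `price` class names and the `ProductParser` helper are assumptions made up for the example, and a real bot would fetch the page over HTTP rather than read a hard-coded string.

```python
import csv
import io
from html.parser import HTMLParser

# Step 1 (load): a hard-coded page stands in for the fetched HTML (hypothetical markup).
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Step 2 (parse): collect the text of the name and price spans of each product."""

    def __init__(self):
        super().__init__()
        self.rows = []      # one dict per product
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.rows.append({})
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape_to_csv(html: str) -> str:
    """Step 3 (output): reformat the extracted rows as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(parser.rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(scrape_to_csv(SAMPLE_HTML))
```

Run against the sample page, the script prints a header row followed by one CSV line per product. The same three-stage shape applies whether the target is a product catalog, a price list or a user directory, which is why scraping scales so easily once a bot knows a site's markup.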

4 Business Impacts of Bot Attacks on Websites or Applications

Even in the digital era, many companies still rely on conventional solutions to detect bots. Modern bots, however, can act like humans and get past technologies such as CAPTCHAs and other older heuristics; only a fully managed bot detection solution can control that.

Also, with the increasing number of smart devices in people’s homes, such as printers, monitors and light bulbs, it is becoming possible to use these objects as bots to stage cyber-attacks.

Let’s try to understand how such malicious bots are used to initiate unauthorized crawls and scrape data, costing business owners a hefty sum.

1) Data Harvesting and Scanning for Vulnerabilities to Exploit

Cybercriminals use bad bots to create malicious web traffic, scrape content and data, and orchestrate attacks on websites. The same bots can gain illegal access to private information, snoop through content and scan applications for vulnerabilities to exploit.

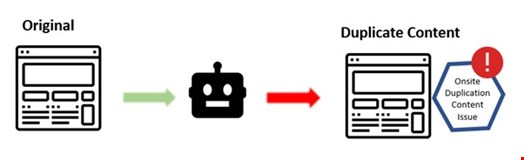

2) Content Duplication Leading to Loss of SEO and Authority

Highly reputable websites that are targeted by scraper bots often see their rankings suffer because of duplicated, stolen content and related copyright infringement issues. When scraper bots copy and repost your original content, they can greatly damage the website’s credibility and Google’s trust in the site overall, resulting in significant penalties.

3) Server Overload

Bot traffic can overload your server infrastructure by sending millions of requests to a specific path. End users accessing the page then experience slowness as resources are exhausted, leading to severe issues such as long response times and server crashes.
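One common way to blunt this kind of flood is to cap how many requests a single client may send to a given path within a time window. The sketch below is a simplified, in-memory illustration of that idea. The `SlidingWindowLimiter` class, its limits and the example IP address are all assumptions for the example, and a production setup would typically enforce such limits at the load balancer or firewall rather than in application code.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client and path within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # (client_ip, path) -> timestamps of the requests that were accepted
        self._hits = defaultdict(deque)

    def allow(self, client_ip: str, path: str, now: float) -> bool:
        hits = self._hits[(client_ip, path)]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject instead of doing the work
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
    # Five requests to the same path within 0.4 s: only the first three pass.
    print([limiter.allow("203.0.113.7", "/search", t / 10) for t in range(5)])
    # Once the window has passed, the same client is allowed again.
    print(limiter.allow("203.0.113.7", "/search", 2.0))
```

The timestamp is passed in explicitly so the behavior is easy to test; in a running service it would come from a clock such as `time.monotonic()`. A per-IP limit alone does not stop a botnet that spreads its requests across many addresses, which is one reason dedicated bot detection looks at more than request counts.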

4) Click Fraud Leading to Bad Business Decisions

In click fraud, bots pretend to be legitimate visitors on a web page and click on adverts, buttons and other hyperlinks to generate phony traffic statistics and deceive advertisers.

According to The Global Click Fraud Report 2021, the average yearly loss for SMEs due to click fraud during 2020 was $14,900.

Automated likes or upvotes on a post that don’t reflect the nature of the post itself are also considered click fraud.

If a cyber-criminal has control over a botnet or is using hijacked IP addresses to carry out click fraud, they can perpetrate their crimes on a large scale.

With web and click metrics this distorted, it becomes challenging to determine which traffic comes from a real user’s click and which from a bot.
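To make the difficulty concrete, here is a deliberately simple heuristic for separating scripted clicks from human ones: people click at irregular intervals, while a naive script tends to click at a near-constant pace. The `suspicious_clickers` function, its thresholds and the click log format are assumptions for illustration only. Sophisticated bots randomize their timing precisely to defeat checks like this one.

```python
from collections import defaultdict
from statistics import pstdev


def suspicious_clickers(clicks, min_clicks=5, max_jitter_seconds=0.5):
    """Return the IPs whose clicks are both frequent and suspiciously evenly spaced.

    `clicks` is an iterable of (ip, timestamp_seconds) pairs.
    """
    by_ip = defaultdict(list)
    for ip, timestamp in clicks:
        by_ip[ip].append(timestamp)

    flagged = set()
    for ip, times in by_ip.items():
        # At least two clicks are needed to measure a gap between them.
        if len(times) < max(min_clicks, 2):
            continue
        times.sort()
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        # Near-constant gaps (low standard deviation) suggest a script.
        if pstdev(gaps) <= max_jitter_seconds:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    click_log = (
        [("198.51.100.9", t) for t in (0, 2, 4, 6, 8, 10)]     # one click every 2 s
        + [("192.0.2.44", t) for t in (0, 7, 9, 31, 33, 90)]   # irregular, human-like
        + [("192.0.2.80", t) for t in (5, 6)]                   # too few clicks to judge
    )
    print(suspicious_clickers(click_log))
```

In this made-up log only the evenly spaced clicker is flagged; the irregular visitor and the visitor with just two clicks are left alone.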

Web Scraping Bot Detection – How Bot Management Solutions Help

A bot mitigation solution can identify visitor behavior that shows signs of bot activity in real time and automatically block bad bots before they carry out scraping attacks, while ensuring a smooth experience for human users.
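As a rough illustration of behavior-based detection, the sketch below combines a few visitor signals into a score and maps that score to an action. Everything in it is hypothetical: the `VisitorSignals` fields, the weights and the thresholds are invented for the example and do not describe how any particular product works. Commercial solutions rely on far richer signals and on models rather than hand-picked weights.

```python
from dataclasses import dataclass


@dataclass
class VisitorSignals:
    requests_per_minute: int
    user_agent: str
    executed_javascript: bool  # did the client run the page's scripts?
    hit_honeypot_link: bool    # did it follow a link hidden from human users?


def bot_score(signals: VisitorSignals) -> int:
    """Add up hand-picked weights; a higher score means more bot-like."""
    score = 0
    if signals.requests_per_minute > 120:
        score += 40
    if not signals.user_agent.strip():
        score += 20
    if not signals.executed_javascript:
        score += 20
    if signals.hit_honeypot_link:
        score += 50
    return score


def decide(signals: VisitorSignals, challenge_at: int = 40, block_at: int = 70) -> str:
    """Map the score to an action: allow, challenge (e.g. a CAPTCHA) or block."""
    score = bot_score(signals)
    if score >= block_at:
        return "block"
    if score >= challenge_at:
        return "challenge"
    return "allow"


if __name__ == "__main__":
    human = VisitorSignals(12, "Mozilla/5.0", True, False)
    fast_browser = VisitorSignals(300, "Mozilla/5.0", True, False)
    scraper = VisitorSignals(600, "", False, True)
    for visitor in (human, fast_browser, scraper):
        print(decide(visitor))
```

The middle tier matters for the "smooth experience" goal: a visitor who merely looks unusual is challenged rather than blocked outright, so a false positive costs a human one extra step instead of locking them out.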

With the Indusface bot mitigation solution, you can control how legitimate and malicious bots are allowed to access your web service. Because it leverages machine learning and bot intelligence techniques, your business is continuously protected against known web scrapers and emerging bot attacks.

Fully configurable with custom rules, it can be easily installed on a server and offers multi-layered, real-time protection against sophisticated botnet attacks.