The use of AI in the consumer market has exploded like never before, driven by the launch of general-purpose AI tools like OpenAI’s ChatGPT and Stability AI’s Stable Diffusion in 2022. In parallel, however, the same tools have increasingly been used for malicious purposes, leading governments to speed up their regulatory roadmaps.

Sam Altman, OpenAI’s CEO, even called for the creation of a licensing agency for AI companies in front of a US Senate committee on May 16, 2023.

This comes two months after ChatGPT encountered a significant setback in March, when Italy’s data protection agency (GPDP) temporarily banned it in the country.

Behind the ban was a concern raised by the GPDP regarding the absence of a legal basis to justify “the mass collection and storage of personal data for the purpose of 'training' the algorithms underlying [ChatGPT’s] operation.”

While OpenAI resumed its services on April 28 after implementing additional privacy disclosures and controls, ChatGPT is now being investigated by other data protection authorities, including Canada’s Privacy Commissioner and the European Data Protection Board, the latter of which launched a task force dedicated to OpenAI’s service on April 14.

Along with privacy, the explosion of generative AI tools has also brought to the fore many security concerns.

One fear is that those tools can spread misinformation by scraping the internet indiscriminately to build the AI models. This prompted the UK’s Competition and Markets Authority (CMA) to launch a review of the AI market on May 4.

Additionally, generative AI tools are able to produce legitimate-looking content, primarily text and images, which has been leveraged for malicious purposes, including generating malware, deepfakes, drug-making recipes and phishing kits. It is possible to bypass the restrictions within these systems by using creative prompts designed to work around the rules and guardrails implemented by the developers – a practice called ‘jailbreaking.’

EU Leading the Regulatory Way

AI regulation has already been in the pipeline for a number of years in some countries. For instance, the EU’s AI Act was introduced in April 2021 and Canada’s AI and Data Act in June 2022.

The latest draft of the EU regulation, approved by the Internal Market Committee and the Civil Liberties Committee on May 11, showed that the adoption of generative AI models has already influenced the text.

On top of initial proposals, which revolve around adding safeguards to biometric data exploitation, mass surveillance systems and policing algorithms, the updated draft introduced new measures to control “foundational models.”

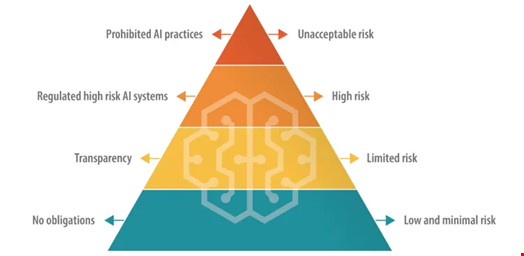

Under the proposed legislation, “general purpose AI models” would be classified into four categories based on their level of risk: low and minimal risk, limited risk, high risk and unacceptable risk. The first category would be unregulated, and developers of AI models with limited risk would only be required to be transparent about how they work.

High-risk AI models, however, would be highly regulated. This includes creating a database of general-purpose and high-risk AI systems to explain where, when and how they’re being deployed in the EU.

“This database should be freely and publicly accessible, easily understandable, and machine-readable. It should also be user-friendly and easily navigable, with search functionalities at minimum allowing the general public to search the database for specific high-risk systems, locations, categories of risk [and] keywords,” says the draft.

AI models involving unacceptable risk would be banned altogether.

This draft has been praised by digital rights advocacy group European Digital Rights and NGO Access Now, which called it “a victory for fundamental rights.”

Daniel Leufer, a senior policy analyst at Access Now, said in a public statement, “Important changes have been made to stop harmful applications like dangerous biometric surveillance and predictive policing, as well as increasing accountability and transparency requirements for deployers of high-risk AI systems. However, lawmakers must address the critical gaps that remain, such as a dangerous loophole in [the] high-risk classification process.”

Little Known About Canada’s AI & Data Act

Much less is known about Canada’s counterpart legislation, the AI and Data Act, part of federal Bill C-27, the Digital Charter Implementation Act.

According to a companion document published in March, however, it seems that the country’s strategy will differ from the EU’s approach as it does not ban the use of automated decision-making tools, even in critical areas. Instead, under Canada’s regulation, developers would have to create a mitigation plan to reduce risks and increase transparency when using AI in high-risk systems.

According to four lawyers from law firm DLA Piper, “This companion paper fills in some, but not all, of the gaps and uncertainty raised in the proposed AIDA, which is drafted to leave much of its details to be worked out later. [For instance], while it outlines that the regulatory impositions would be proportionate to the risk based on international standards, the details are not spelled out.”

US, UK Take Slow Approach

In the US, the government has yet to pass federal legislation governing all AI applications.

Instead, the Biden administration and a few federal agencies have published non-binding guidance, including the October 2022 Blueprint for an AI Bill of Rights, which addresses concerns about AI misuse and provides recommendations for safely using AI tools in both the public and private sectors, and the National Institute of Standards and Technology’s (NIST) AI Risk Management Framework 1.0, launched in January 2023.

In 2022, several US states also started looking into AI legislation, some focusing on the private sector and others on public-sector AI use. Also, New York City introduced one of the first AI laws in the country, effective from January 2023, which aims to prevent AI bias in the employment process.

With the rise of generative AI use – and misuse – privacy and security advocacy organizations increasingly urge the US government to act. For example, the Center for AI and Digital Policy filed a complaint in March 2023 urging the US Federal Trade Commission (FTC) to prevent OpenAI from launching new commercial versions beyond its present iteration, GPT-4, and ensure the establishment of necessary guardrails to protect consumers, businesses, and the commercial marketplace.

Meeting the Founders

Many other countries, including the UK, China and Singapore, have not yet announced any AI governance legislation similar to the EU’s AI Act and Canada’s AI & Data Act, according to Data Protection and Fairness in AI-Driven Automated Data Processing Applications, a regulatory overview published by OneTrust in May 2023.

Instead, the UK government said in March it was taking “a pro-innovation approach to AI regulation” and announced in April that it would invest £100m ($125m) to launch a Foundation Model Taskforce, which it hopes will help spur the development of AI systems that can boost the nation’s GDP.

Michael Schwarz, chief economist at Microsoft, ChatGPT’s main backer, said during the World Economic Forum Growth Summit on May 3 that regulators “should wait until we see [any] harm before we regulate [generative AI models].”

The next day, US Vice President Kamala Harris met with the CEOs of four American companies involved in the development of generative AI – Alphabet, Anthropic, Microsoft, and OpenAI – as the Biden administration announced new measures to promote ‘responsible’ AI.

The details of this conversation were not revealed, but when he testified before a US Senate committee on May 16, OpenAI’s Altman was much more open to regulating AI than Schwarz and acknowledged the potential dangers his product could pose to jobs and democracy.

He said AI companies like his should be independently audited and should be given licenses to operate by a dedicated government agency.