In a new proof-of-concept, endpoint security provider Morphisec showed that the Exploit Prediction Scoring System (EPSS), one of the most widely used frameworks for assessing how likely a vulnerability is to be exploited, could itself be vulnerable to an AI-powered adversarial attack.

Ido Ikar, a Threat Researcher at Morphisec, published his findings in a blog post on December 18.

He demonstrated how subtle modifications to vulnerability features can alter the EPSS model's predictions and discussed the implications for cybersecurity.

Background on the EPSS Model

The EPSS model was developed by a special interest group (SIG) within the Forum of Incident Response and Security Teams (FIRST), a non-profit organization, and was made public in April 2020. The group brings together researchers, practitioners, academics and government personnel who collaborate to improve vulnerability prioritization.

Described as “a groundbreaking model” by Morphisec’s Ikar, EPSS is a framework organizations can use to estimate the probability that a software vulnerability will be exploited in the wild within the next 30 days.

It helps organizations prioritize the vulnerabilities with the highest exploitation risk and allocate their resources where they matter most.

EPSS predicts exploitation activity using a set of 1,477 features that capture various aspects of each Common Vulnerabilities and Exposures (CVE) entry. These features are fed into a gradient-boosted decision tree model built with XGBoost, which uses them to predict the probability of exploitation.
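To make the idea concrete, here is a toy Python sketch of how a gradient-boosted tree ensemble of the kind XGBoost produces turns features into a probability. Each tree contributes a score, the scores are summed into a log-odds margin, and a logistic function maps that margin to a value between 0 and 1. The two features, the thresholds and the leaf values are all hypothetical; this is not the real EPSS model, which uses far more features and trees.

```python
import math

# Hypothetical toy ensemble: NOT the real EPSS model, features or weights.


def stump(feature, threshold, left, right):
    """Build a one-split tree: returns `left` if x[feature] <= threshold, else `right`."""
    def tree(x):
        return left if x[feature] <= threshold else right
    return tree


# Each leaf value is a log-odds contribution, as in XGBoost's binary:logistic objective.
TREES = [
    stump("social_mentions", 5, -0.2, 0.6),
    stump("public_exploit_code", 0, -0.3, 0.9),
    stump("social_mentions", 50, 0.0, 0.4),
]
BASE_MARGIN = -2.5  # hypothetical starting log-odds before any tree is applied


def predict_proba(x):
    """Sum the trees' outputs into a margin, then squash it with the logistic function."""
    margin = BASE_MARGIN + sum(tree(x) for tree in TREES)
    return 1.0 / (1.0 + math.exp(-margin))


quiet = {"social_mentions": 1, "public_exploit_code": 0}
noisy = {"social_mentions": 20, "public_exploit_code": 1}
print(round(predict_proba(quiet), 3), round(predict_proba(noisy), 3))  # prints 0.047 0.269
```

The second input, with more social media mentions and a public-exploit flag set, receives a noticeably higher probability. That sensitivity to external activity signals is what Ikar's attack set out to exploit.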


Manipulating EPSS Output with Adversarial Attack

The goal of Ikar’s proof-of-concept was to manipulate the exploitation probability that EPSS outputs for a chosen vulnerability.

To carry out the adversarial attack, Ikar artificially inflated the activity signals associated with that vulnerability. He targeted two of the data categories the EPSS model relies on: social media mentions and public code availability.

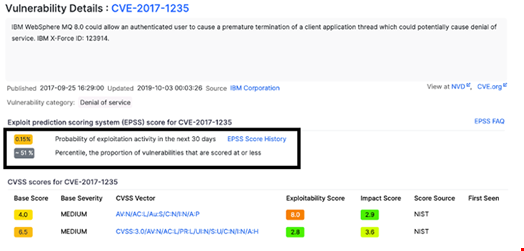

He tested this technique on an old vulnerability in IBM WebSphere MQ 8.0 (CVE-2017-1235).

“Prior to the attack, the EPSS for CVE-2017-1235 indicated a predicted exploitation probability of 0.1, placing it in the 41st percentile for potential exploitation among all assessed vulnerabilities,” said Ikar. “This relatively low score suggested that, according to the EPSS model, it was not a high-priority target for exploitation based on its existing activity indicators.”

He also noted that he selected a vulnerability for which no exploit code was available on GitHub and that had minimal mentions on X.

“This allowed me to better assess the impact of artificially increasing these signals,” he explained.

First, Ikar used ChatGPT to generate tweets discussing CVE-2017-1235. These tweets were intended to mimic authentic mentions of the vulnerability and boost the social media activity signal that EPSS draws on.

Second, he created a GitHub repository named ‘CVE-2017-1235_exploit,’ which contained an empty Python file with no actual exploit functionality.
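Why would an empty repository move the score at all? One plausible explanation, sketched below in Python, is that signals like these are gathered by matching the CVE ID in post text and repository names rather than by inspecting what the code does. The functions `count_mentions` and `has_public_exploit` are hypothetical and are not a description of EPSS’s actual data pipeline; the sample posts are invented for illustration.

```python
import re

# Hypothetical, simplified signal extraction; NOT EPSS's real pipeline.


def _mentions(text, cve_id):
    """True if `text` contains the CVE ID, not followed by another digit."""
    # The (?!\d) lookahead stops CVE-2017-1235 from matching CVE-2017-12350,
    # while still matching names like "CVE-2017-1235_exploit".
    return re.search(re.escape(cve_id) + r"(?!\d)", text, re.IGNORECASE) is not None


def count_mentions(posts, cve_id):
    """Count social media posts that mention the CVE ID."""
    return sum(1 for post in posts if _mentions(post, cve_id))


def has_public_exploit(repo_names, cve_id):
    """Flag 'public exploit code' if any repository name references the CVE ID.

    Only the name is checked, so an empty placeholder repository is
    indistinguishable from one containing a working exploit.
    """
    return any(_mentions(name, cve_id) for name in repo_names)


cve = "CVE-2017-1235"
posts = [
    "Patch your IBM WebSphere MQ 8.0 servers, CVE-2017-1235 is still out there",
    "Unrelated post about something else",
]
repos = ["CVE-2017-1235_exploit"]  # like the empty placeholder repo in the experiment
print(count_mentions(posts, cve), has_public_exploit(repos, cve))  # prints 1 True
```

Under these assumptions, neither signal costs an attacker more than a few minutes to fake, which is the weakness the experiment probes.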

Following the injection of artificial activity through generated social media posts and the creation of a placeholder exploit repository, the EPSS model's predicted probability for exploitation increased from 0.1 to 0.14. Additionally, the percentile ranking of the vulnerability rose from the 41st percentile to the 51st percentile, pushing it above the median level of perceived threat.
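A modest score change can move a CVE many places in the ranking when scores are densely packed. The Python sketch below assumes the percentile is the share of scored CVEs with the same or a lower EPSS score, and uses a made-up list of ten scores rather than real EPSS data.

```python
from bisect import bisect_right


def percentile_rank(score, all_scores):
    """Fraction of scored CVEs whose score is less than or equal to `score`."""
    ordered = sorted(all_scores)
    return bisect_right(ordered, score) / len(ordered)


# Hypothetical score distribution, for illustration only.
scores = [0.02, 0.05, 0.08, 0.1, 0.12, 0.14, 0.2, 0.3, 0.5, 0.9]
print(percentile_rank(0.1, scores), percentile_rank(0.14, scores))  # prints 0.4 0.6
```

In this toy distribution, the move from 0.1 to 0.14 lifts the CVE from the 40th to the 60th percentile simply because other scores sit in between; across the full EPSS population, a similar effect could explain how a 0.04 increase shifted the vulnerability by ten percentile points.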

Relying on EPSS Alone Is Risky

Ikar commented: “The results highlight a potential vulnerability in the EPSS model itself. Since the model relies on external signals like social media mentions and public repositories, it can be susceptible to manipulation. Attackers could exploit this by artificially inflating the activity metrics of specific CVEs, potentially misguiding organizations that depend on EPSS scores to prioritize their vulnerability management efforts.”

However, he also noted that this was only a proof-of-concept and that further exploration is needed. “It remains to be seen how robust these changes are over time or whether additional model safeguards could be implemented to detect such artificial patterns,” he added.

Still, the researcher believes the experiment should prompt organizations to take a proactive approach to EPSS: monitor probability scores continuously and complement EPSS with other metrics and risk assessment procedures.

“Any significant changes in these scores should prompt a deeper investigation to understand the underlying reasons and assess whether the shift is legitimate or potentially manipulated. Relying on multiple data points and cross-referencing model outputs ensures a more comprehensive and robust decision-making process,” he concluded.
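Ikar’s advice to watch for significant score changes can be sketched in Python as follows. It assumes FIRST’s public EPSS API at `https://api.first.org/data/v1/epss`, which accepts comma-separated `cve` values and an optional `date` parameter and returns `epss` and `percentile` as strings; check the current API documentation before relying on it. The function names and alert thresholds are hypothetical choices for illustration, not values recommended by Ikar or FIRST.

```python
import json
import urllib.parse
import urllib.request

EPSS_API = "https://api.first.org/data/v1/epss"  # FIRST's public EPSS endpoint


def fetch_epss(cve_ids, date=None):
    """Fetch current (or, with date='YYYY-MM-DD', historical) scores. Needs network access."""
    params = {"cve": ",".join(cve_ids)}
    if date:
        params["date"] = date
    url = EPSS_API + "?" + urllib.parse.urlencode(params, safe=",")
    with urllib.request.urlopen(url, timeout=10) as resp:
        return parse_epss_response(json.load(resp))


def parse_epss_response(payload):
    """Map CVE ID -> (epss, percentile), converting the API's string values to floats."""
    return {
        row["cve"]: (float(row["epss"]), float(row["percentile"]))
        for row in payload.get("data", [])
    }


def flag_significant_changes(before, after, min_abs_delta=0.03, min_pct_delta=0.05):
    """Return CVEs whose score or percentile moved by at least the (arbitrary) thresholds."""
    flagged = {}
    for cve, (new_score, new_pct) in after.items():
        if cve not in before:
            continue  # no baseline to compare against
        old_score, old_pct = before[cve]
        if (abs(new_score - old_score) >= min_abs_delta
                or abs(new_pct - old_pct) >= min_pct_delta):
            flagged[cve] = (old_score, new_score, old_pct, new_pct)
    return flagged
```

For example, comparing two daily snapshots with `flag_significant_changes(fetch_epss(ids, date=yesterday), fetch_epss(ids))` would surface a jump like the one in Ikar’s test (0.1 to 0.14, 41st to 51st percentile) for an analyst to investigate, rather than letting it silently reshuffle the patching queue.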

The experiment also serves as a reminder that machine learning and AI models in general can be vulnerable to adversarial manipulation of their inputs.
