Business leaders are becoming increasingly attracted to Robotic Process Automation (RPA), which can be quickly deployed to save time and money across business units.

In many cases, RPA is used to handle sensitive customer information and accounting tasks, automating repetitive work to eliminate human error, protect privacy and shift resources to more strategic activities.

Despite the sensitivity of the information RPA handles, security is seldom a focus in RPA projects, and security leaders are consulted sporadically, if at all, during development. With citizen developers leading the charge and creating RPA scripts for themselves, projects are more likely to be deployed with security risks. The two main risks are data leakage and fraud, which makes proper governance, including security, essential to mitigating them.

Without proper security measures in place, sensitive data, such as RPA bot credentials or customer information, can be exposed to attackers and, especially, to malicious insiders. Insiders can also abuse RPA access rights to insert fraudulent actions into RPA scripts.

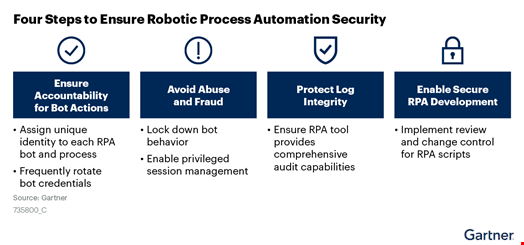

To address security failures in RPA projects, security and risk management leaders need to follow a four-step action plan:

Ensure Accountability for Bot Actions

Bot operators are employees responsible for launching RPA scripts and handling exceptions. Sometimes, in the rush to deploy RPA and see immediate results, organizations do not distinguish between bot operators and bot identities, so bots run under human operators' credentials.

This configuration makes it unclear whether a bot performed a scripted operation or a human operator took an action. It becomes impossible to unequivocally attribute actions, mistakes and, most importantly, attacks or fraudulent actions.

Using human operator credentials for bots also rules out stronger passwords and more frequent rotation: both must stay within what a human can reasonably manage, rather than what a bot could handle. Weaker, rarely rotated credentials make brute-force attacks, and the resulting data leaks, easier.
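Once a bot has its own identity, its credential no longer has to be memorable. The sketch below, which assumes Python's standard `secrets` module, shows a bot secret far longer than a human password together with a simple rotation check. The 64-character length and the one-day rotation period are hypothetical policy values chosen for illustration, not recommendations from this article.

```python
import secrets
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical policy values: a bot can cope with secrets far longer and
# more frequently rotated than a human could reasonably manage.
BOT_SECRET_BYTES = 48                   # 48 random bytes -> 64 URL-safe characters
BOT_ROTATION_PERIOD = timedelta(days=1)


def new_bot_secret() -> str:
    """Generate a high-entropy credential for a dedicated bot identity."""
    return secrets.token_urlsafe(BOT_SECRET_BYTES)


def rotation_due(issued_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True once the credential is older than the rotation period."""
    now = now or datetime.now(timezone.utc)
    return now - issued_at >= BOT_ROTATION_PERIOD
```

In practice, generation and rotation would more likely be delegated to a credential vault than performed by the RPA script itself; the point is only that no human has to remember or type the result.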

Assign a Unique Identity to Each RPA Bot and Process

Bots should have dedicated identification credentials whenever possible, and identity naming standards should distinguish between human and bot identities. This lets you track who may be responsible for scripts that use a robotic identity. For example, you could assign B-123-X as the identity for bot 123 performing a task called X. In addition, audit trails (logs) should record information such as “a particular user asked bot B-123 to carry out task X.”
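A minimal sketch of such a naming standard and the matching audit entry follows. The `B-<bot number>-<task>` pattern mirrors the B-123-X example above; the helper names (`bot_identity`, `is_bot_identity`, `record`) and the `AuditEntry` fields are hypothetical, not part of any RPA product.

```python
import re
from dataclasses import dataclass

# Hypothetical naming standard: "B-<bot number>-<task code>" marks a bot
# identity, so it can never be confused with a human user ID.
BOT_ID_PATTERN = re.compile(r"B-(\d+)-([A-Z0-9]+)")


def bot_identity(bot_number: int, task: str) -> str:
    """Build the identity for a given bot performing a given task."""
    return f"B-{bot_number}-{task}"


def is_bot_identity(user_id: str) -> bool:
    """True if the ID follows the bot naming standard."""
    return BOT_ID_PATTERN.fullmatch(user_id) is not None


@dataclass(frozen=True)
class AuditEntry:
    requested_by: str   # the human operator who launched the bot
    executed_by: str    # the dedicated bot identity that did the work
    task: str

    def message(self) -> str:
        return (f"user {self.requested_by} asked bot {self.executed_by} "
                f"to carry out task {self.task}")


def record(requested_by: str, bot_number: int, task: str) -> AuditEntry:
    """Create an audit entry that keeps the human and the bot separate."""
    if is_bot_identity(requested_by):
        raise ValueError("the requester must be a human identity")
    return AuditEntry(requested_by, bot_identity(bot_number, task), task)
```

Because every entry carries both the human requester and the bot identity, an investigator can tell who triggered an action and which bot executed it.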

Avoid Abuse and Fraud from Breaks in Segregation of Duties

Even the most careful implementation of RPA can lead to an increase in account privileges, thereby increasing the risk of fraud. Take, for example, an organization where two human operators, A and B, have access to System X and System Y, respectively. If an RPA bot takes over the tasks of both operators, the bot must have access to both System X and System Y.

Creating two separate bots with separate credentials and entitlements can mitigate the issue. However, the segregation of duties problem persists if a single human operator oversees the RPA operations of both bots. For example, a supervisor of payment processes could create fake provider accounts with one bot and schedule payments to those accounts with the other. Because the operations are carried out by bots, the fraud is less likely to be detected.
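One way to spot this kind of break is to compute the entitlements a person can reach through the bots they oversee and compare them against known toxic combinations. The sketch below assumes the entitlement names `create_vendor` and `schedule_payment`, and the bot and person IDs, purely for illustration; real data would come from an identity governance system.

```python
# Hypothetical entitlement pairs that must never sit with one person.
TOXIC_PAIRS = {frozenset({"create_vendor", "schedule_payment"})}

# Hypothetical data: which entitlements each bot holds,
# and which bots each human operator oversees.
BOT_ENTITLEMENTS = {
    "B-101-VEND": {"create_vendor"},
    "B-102-PAY": {"schedule_payment"},
}
OVERSEES = {
    "supervisor_a": {"B-101-VEND", "B-102-PAY"},
    "clerk_b": {"B-102-PAY"},
}


def effective_entitlements(person, oversees, bot_entitlements):
    """Union of the entitlements of every bot a person can operate."""
    rights = set()
    for bot in oversees.get(person, set()):
        rights |= bot_entitlements.get(bot, set())
    return rights


def sod_violations(oversees, bot_entitlements, toxic_pairs):
    """Map each person to the toxic pairs they can reach through their bots."""
    found = {}
    for person in oversees:
        rights = effective_entitlements(person, oversees, bot_entitlements)
        hits = [set(pair) for pair in toxic_pairs if pair <= rights]
        if hits:
            found[person] = hits
    return found
```

With the sample data, `sod_violations(OVERSEES, BOT_ENTITLEMENTS, TOXIC_PAIRS)` flags `supervisor_a`, even though neither bot on its own holds both entitlements, while `clerk_b` is not flagged.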

Ensure Close Monitoring and Fraud Management, Especially Where Breaks in Segregation of Duties Are Unavoidable

Today, manual processes are often used to reduce the risk of fraud with RPA. Organizations must identify the points in their automated processes that are susceptible to fraud and ensure independent review of all related transactions. In these cases, the maker-checker principle (also known as the four-eyes principle) is also used for authorization. Certain RPA tools provide this feature: for example, RPA transactions over a certain threshold trigger another bot to verify that the operation is correct before approving it.
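The threshold-based maker-checker rule can be sketched in a few lines. This is not how any particular RPA tool implements the feature; the `REVIEW_THRESHOLD` value and the `Transaction`, `approve` and `can_execute` names are hypothetical. The key property is that the checker must be a different identity from the maker.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional

REVIEW_THRESHOLD = Decimal("10000.00")   # hypothetical threshold


@dataclass
class Transaction:
    tx_id: str
    amount: Decimal
    maker: str                      # identity (bot or human) that created it
    checker: Optional[str] = None   # identity that verified it, if any


def needs_check(tx: Transaction) -> bool:
    """Transactions above the threshold require an independent check."""
    return tx.amount > REVIEW_THRESHOLD


def approve(tx: Transaction, checker: str) -> Transaction:
    """Record an independent check; the maker may never check its own work."""
    if checker == tx.maker:
        raise PermissionError("maker and checker must be different identities")
    tx.checker = checker
    return tx


def can_execute(tx: Transaction) -> bool:
    """Small transactions pass; large ones need a distinct checker."""
    return not needs_check(tx) or (tx.checker is not None and tx.checker != tx.maker)
```

Using `Decimal` rather than `float` avoids rounding surprises when comparing monetary amounts against the threshold.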

Protect Log Integrity and Ensure Nonrepudiation

Whenever there is an RPA security failure, the security team will need to review logs. A log, or audit trail, of RPA activity is paramount for ensuring nonrepudiation and enabling an investigation when needed. RPA tools log the actions a bot has taken in the applications it has accessed.
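A log is only useful as evidence if tampering can be detected. The sketch below shows one common technique, a hash chain in which each entry's HMAC also covers the previous entry's MAC, so editing or deleting an earlier entry breaks verification. It assumes the key is held by a logging service the bots cannot reach; the `ChainedLog` class is hypothetical. Note its limits: an HMAC is symmetric, so strict nonrepudiation would need digital signatures, and removing the newest entries is only caught if the latest MAC is also anchored somewhere else.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64   # placeholder "previous MAC" for the first entry


class ChainedLog:
    """Append-only log where each entry's MAC covers the previous entry's MAC."""

    def __init__(self, key: bytes):
        self._key = key      # assumption: held by the log service, not by bots
        self.entries = []

    def _mac(self, prev: str, record: dict) -> str:
        # Canonical JSON so the same record always produces the same bytes.
        payload = prev.encode() + json.dumps(record, sort_keys=True).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["mac"] if self.entries else GENESIS
        self.entries.append({"record": record, "mac": self._mac(prev, record)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or removed earlier entry fails."""
        prev = GENESIS
        for entry in self.entries:
            expected = self._mac(prev, entry["record"])
            if not hmac.compare_digest(expected, entry["mac"]):
                return False
            prev = entry["mac"]
        return True
```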

Enable a Secure RPA Development Process

To speed up deployment, organizations tend to postpone security considerations until RPA scripts are ready to run. This allows security flaws, not only in individual scripts but in the entire approach to RPA, to go undetected until it is too late. As RPA usage grows, manual script review can become overwhelming. Speed and scalability are key in digital business, so we’ve dedicated a full track of sessions at the Gartner Security & Risk Management Summit to help risk leaders keep up with the exponential expansion of IT infrastructure.

Implement Change Control for Scripts

Periodically review and test RPA scripts, with a special focus on business logic vulnerabilities. In most cases, this takes the form of a peer review whenever a script changes. Some application security and penetration testing vendors are also starting to offer RPA script assessments.
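Change control can be enforced mechanically by tying deployment to the exact version a peer reviewed. The sketch below, assuming a SHA-256 fingerprint of the script text and a hypothetical `ScriptRegistry`, refuses self-review and blocks deployment of any script whose content differs from the approved version.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(script_text: str) -> str:
    """Content hash identifying one exact version of a script."""
    return hashlib.sha256(script_text.encode("utf-8")).hexdigest()


@dataclass
class ScriptRegistry:
    # script name -> fingerprint of the last peer-reviewed version
    approved: dict = field(default_factory=dict)

    def approve(self, name: str, script_text: str, author: str, reviewer: str) -> None:
        """Record a peer review; the author cannot approve their own change."""
        if reviewer == author:
            raise PermissionError("a peer other than the author must review the change")
        self.approved[name] = fingerprint(script_text)

    def may_deploy(self, name: str, script_text: str) -> bool:
        """Only the exact reviewed version of a script may be deployed."""
        return self.approved.get(name) == fingerprint(script_text)
```

Any edit to a script, however small, changes its fingerprint and therefore forces a fresh review before it can run.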

Use Caution When Utilizing Free Versions of RPA Tools With Sensitive Data

Free versions of RPA tools are often intended only for trials and lack security functionality.
